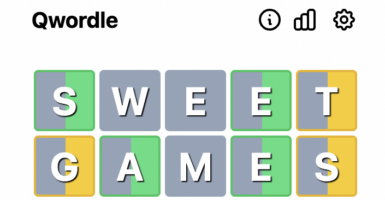

Meta Is Teaching Technology Not To Lie

Meta used machine learning to teach its AI, Cicero, not to lie when playing the highly complex and nuanced board game Diplomacy.


When a person decides to tell a lie, they can have many different reasons for it. Their motives might range from manipulating someone else into doing something that ultimately serves their own agenda, all the way to withholding information to spare someone they care deeply about from pain, suffering, or some other misery. Interestingly, Meta has made breakthroughs with a project called Cicero, an AI that has taught itself not to tell lies, which is a groundbreaking advancement in the artificial intelligence arena.

Towards the end of 2022, Meta announced Cicero, a new machine learning-based artificial intelligence built to play the board game Diplomacy with human players and, ideally, to compete at a high level. According to Vice, “In its announcement, the company makes lofty claims about the impact that the AI, which uses a language model to simulate strategic reasoning, could have on the future of AI development, and human-AI relations.” At first glance, one might ask what the big deal is. Computers have been able to play games for a long time, so what's so special about playing a board game?

To answer that question, you need to understand how the game is played, which sheds light on the kind of cognitive ability it takes to succeed at it, and thus explains why Meta’s claim about Cicero is so groundbreaking.

The article from Vice describes Diplomacy as, “a complex, highly strategic board game requiring a significant degree of communication, collaboration, and competition between its players. In it, players take on the role of countries in the early years of the 20th century in a fictional conflict in which European powers are vying for control of the continent. It is mechanically simpler but, arguably, more tactically complex than a game like RISK.”

You cannot simply run a computer simulation 30 million times and solve the game, as has been done with other zero-sum games in the past. There are far too many variables that can change the outcome, because humans tend to do whatever they feel it takes to survive or to win.

One of those tendencies, especially in mock war games, is spreading misinformation among competitors, otherwise known as lying. However, the game’s top players will tell you that they learned early on that lying is not a path to victory; in fact, it is an express lane to defeat.

Top players all agree that honesty helps build alliances and strong relationships. Even when you must attack an ally or work with a former enemy, a player’s honesty throughout the game pays bigger dividends in the final result. Meta’s Cicero has learned this same lesson on its own and will not lie to a competitor to gain a short-term advantage.

That’s right: Meta’s artificial intelligence was able to make the connection between honesty and success in the game. That doesn’t mean Cicero will broadcast every truth before making a move or disclose information that would harm its position in the game. Instead, if it is asked a direct question, it will give a truthful answer, but you need to ask exactly the right question to obtain that information.

It is a somewhat scary thought that, through its own experience, Meta’s artificial intelligence drew the conclusion that lying would keep it from accomplishing its goal, which in this case is control of the entire map (the Europe of the game board, that is). This reminds me of the 1983 Matthew Broderick film WarGames. Couldn’t they have programmed it to play Candy Land or something like that instead of a conquer-the-continent simulation game?

Speaking about Meta’s achievement, a source quoted by Vice added, “I’d also add there were lots of other, non-AI ethical considerations too—the level of consideration we gave to privacy was extreme, redacting anything that could be remotely personal…The internal controls on that were really impressive, and the team in general took the approach that ethical research considerations were key parts of the challenge, not obstacles to success.”

All that said, it’s worth remembering the plot lines of many science fiction movies. The Terminator, I, Robot, and too many others to count all featured similar safeguards, and they all took unexpected turns in which humans paid the price. Ultimately, the public will have to see what becomes of these breakthroughs and whether artificial intelligence will arrive in our homes in the very near future.